Yu Lu

Postdoctoral Researcher

Zhejiang University

Email: aniki.yulu [AT] gmail dot com

Yu Lu (路雨) is a Postdoctoral Researcher at Zhejiang University, where he leads a small research team working mainly on video and image generative models. He received his Ph.D. from the University of Technology Sydney (UTS), where he was advised by Prof. Yi Yang. He has previously worked at tech institutions including WeiXin Group (Tencent), Kwai Tech, Tencent AI Lab, and Baidu Research.

We are actively looking for PhD students, visiting students, and research interns at Zhejiang University or our collaborating tech institutions. If you are interested in our research, please do not hesitate to contact me.

News

- Sep 2025: FlexSelect and ICEdit accepted by NeurIPS 2025!

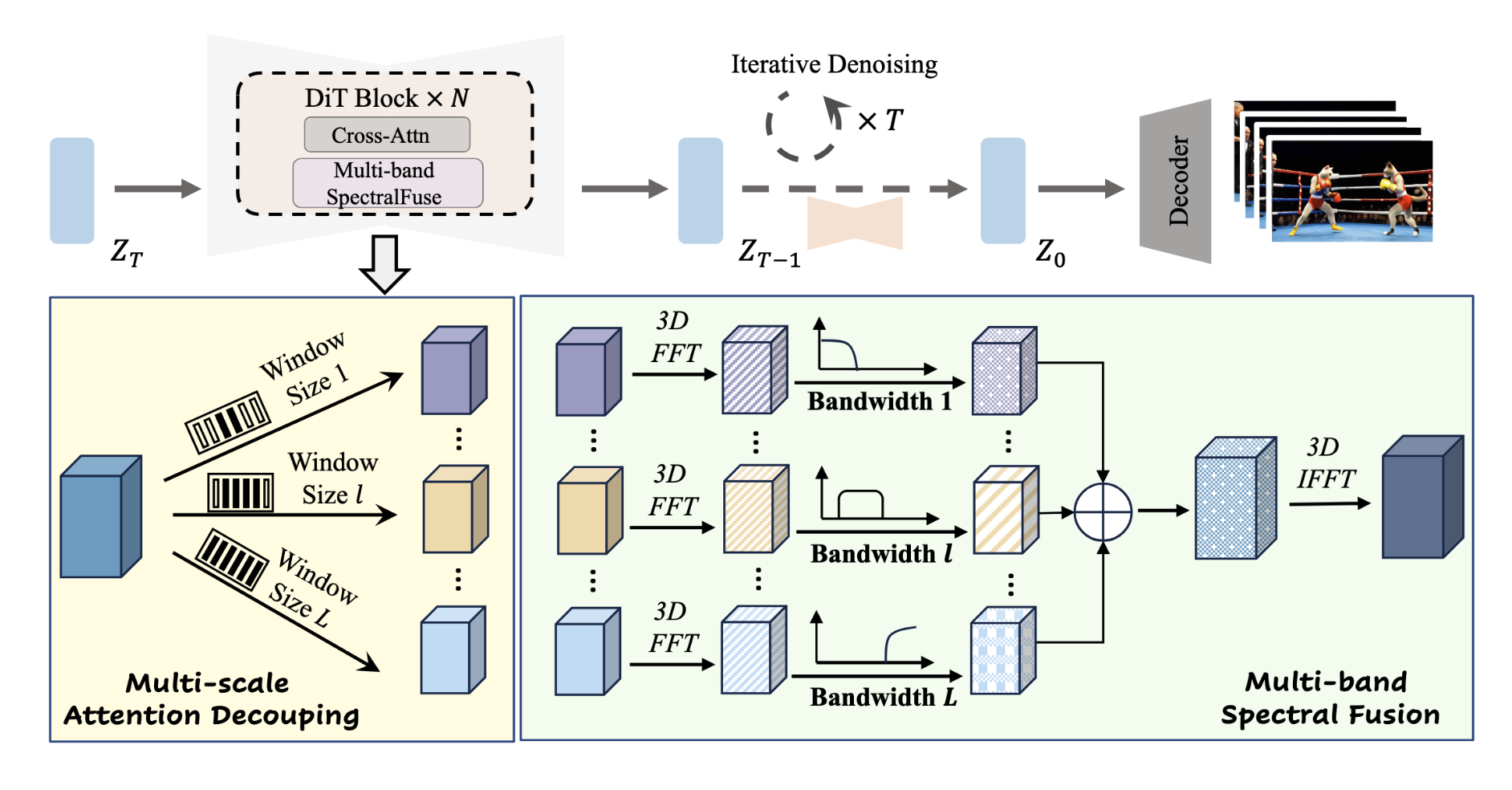

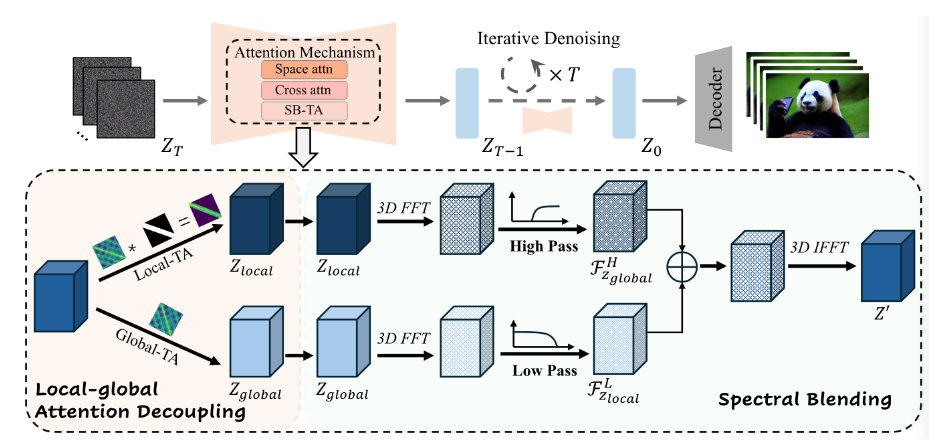

- Jul 2025: We released the FreeLong++ paper on long video generation, enabling 8x longer videos!

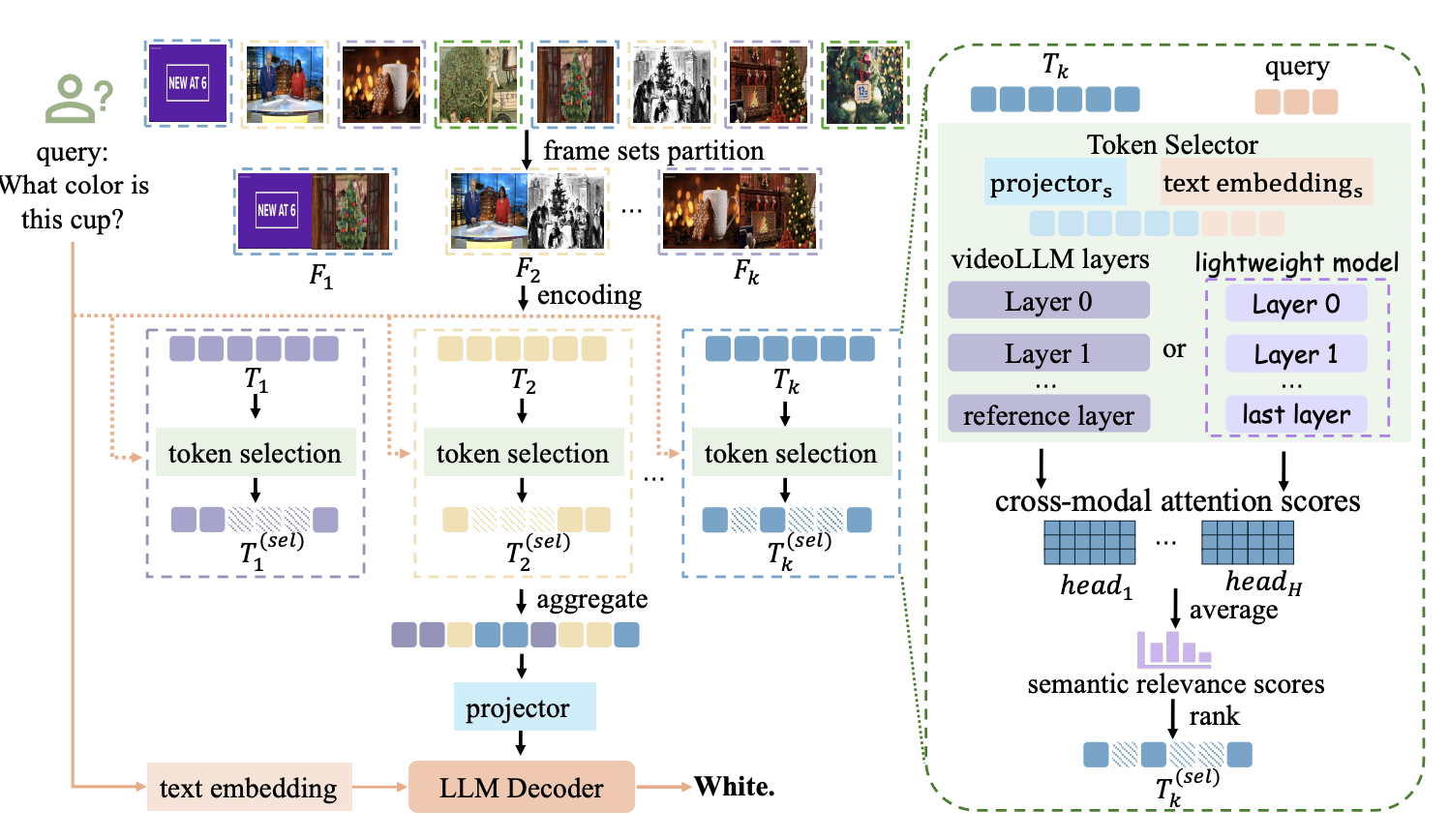

- Jun 2025: We released the FlexSelect paper on long video understanding, running 9x faster than the baseline!

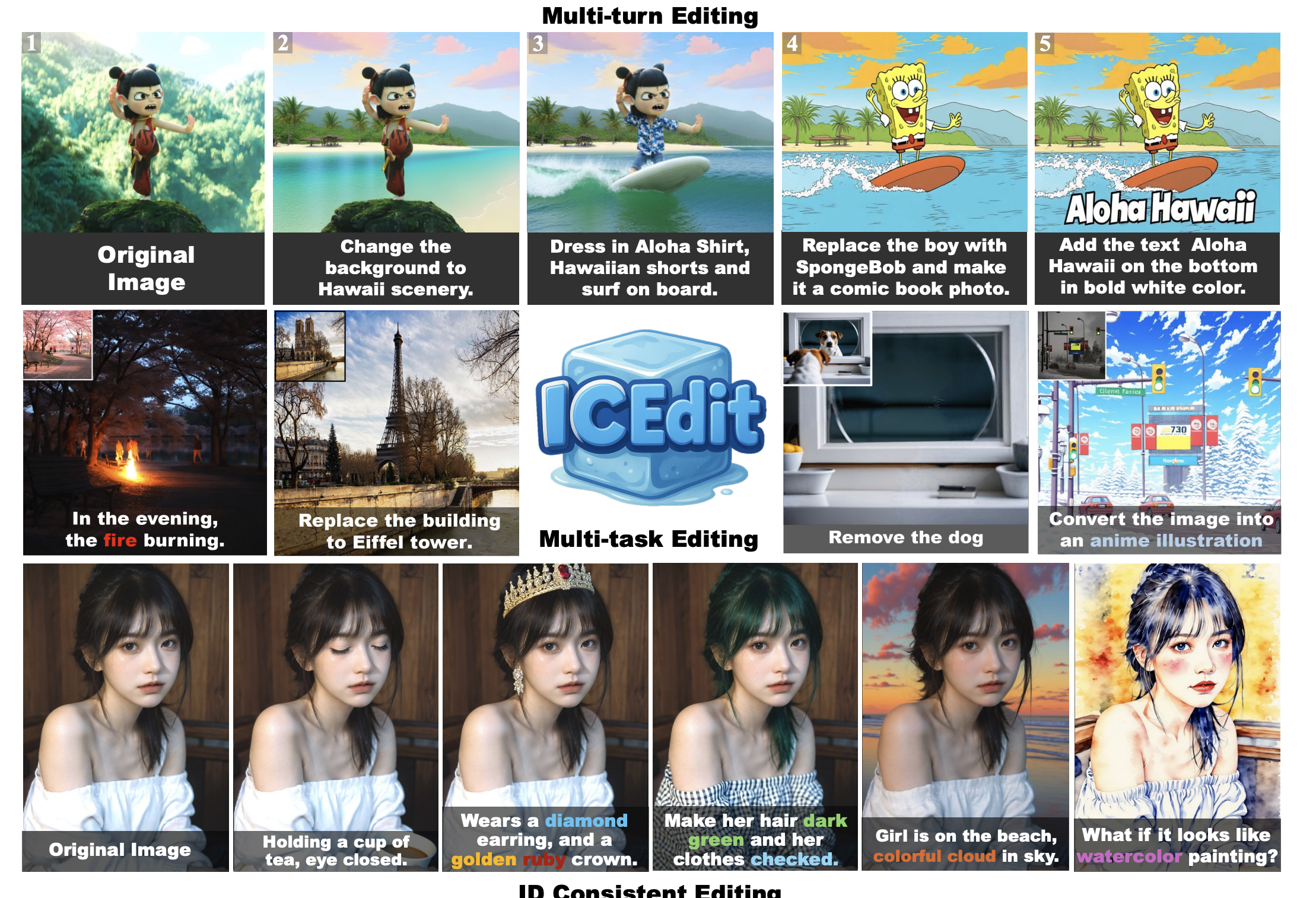

- May 2025: We released the In-Context Edit (ICEdit) paper on image editing!

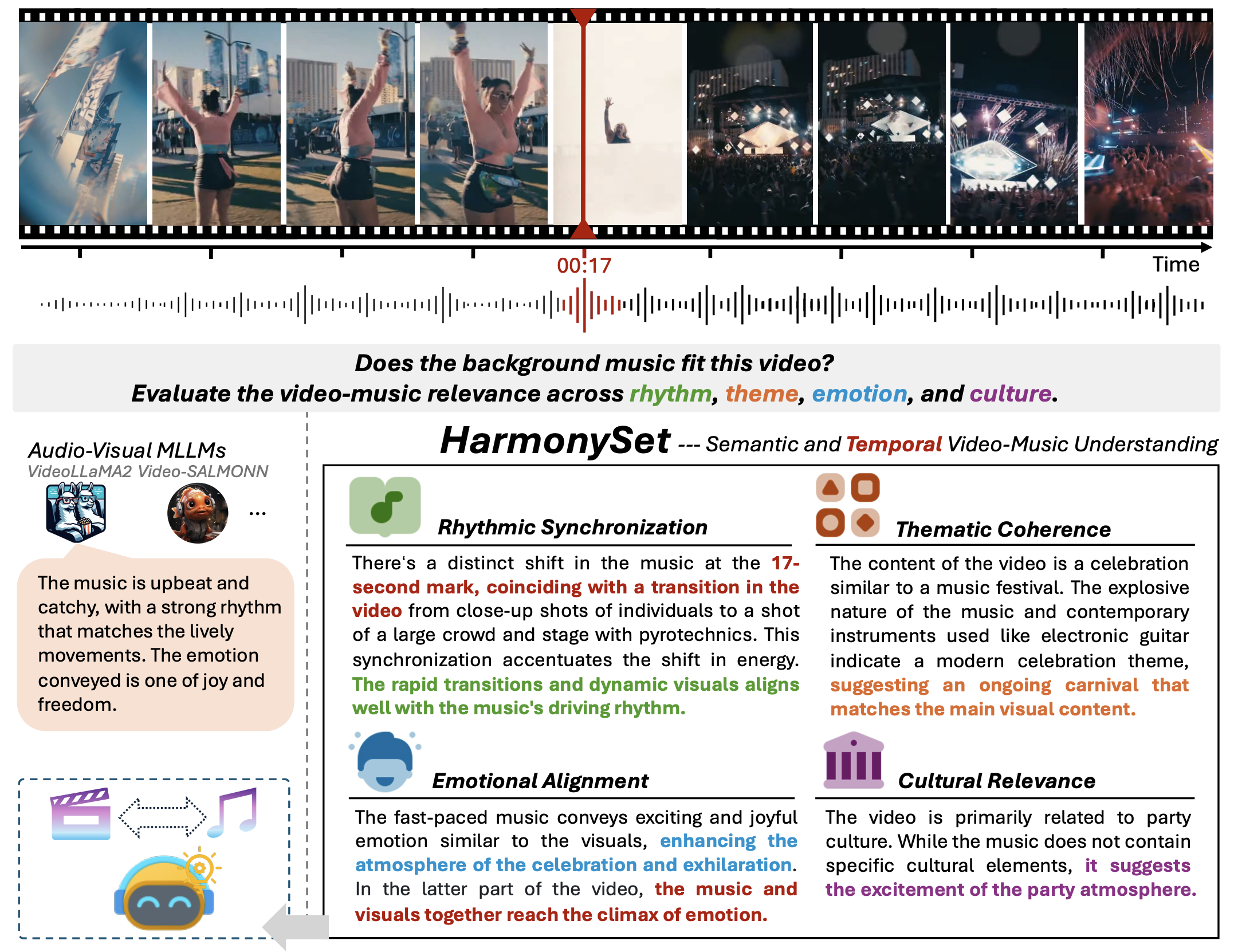

- Feb 2025: HarmonySet accepted by CVPR 2025!

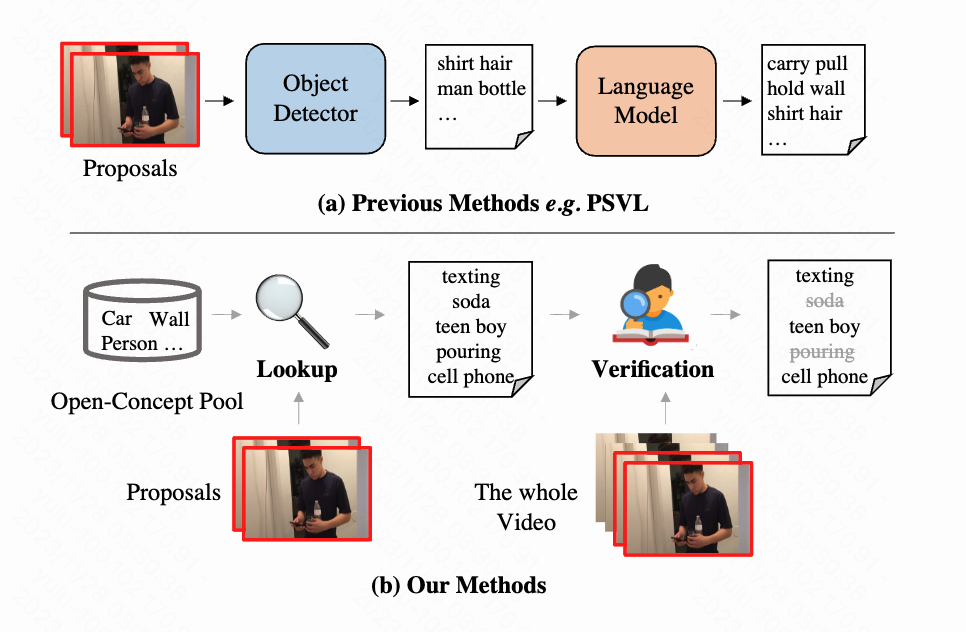

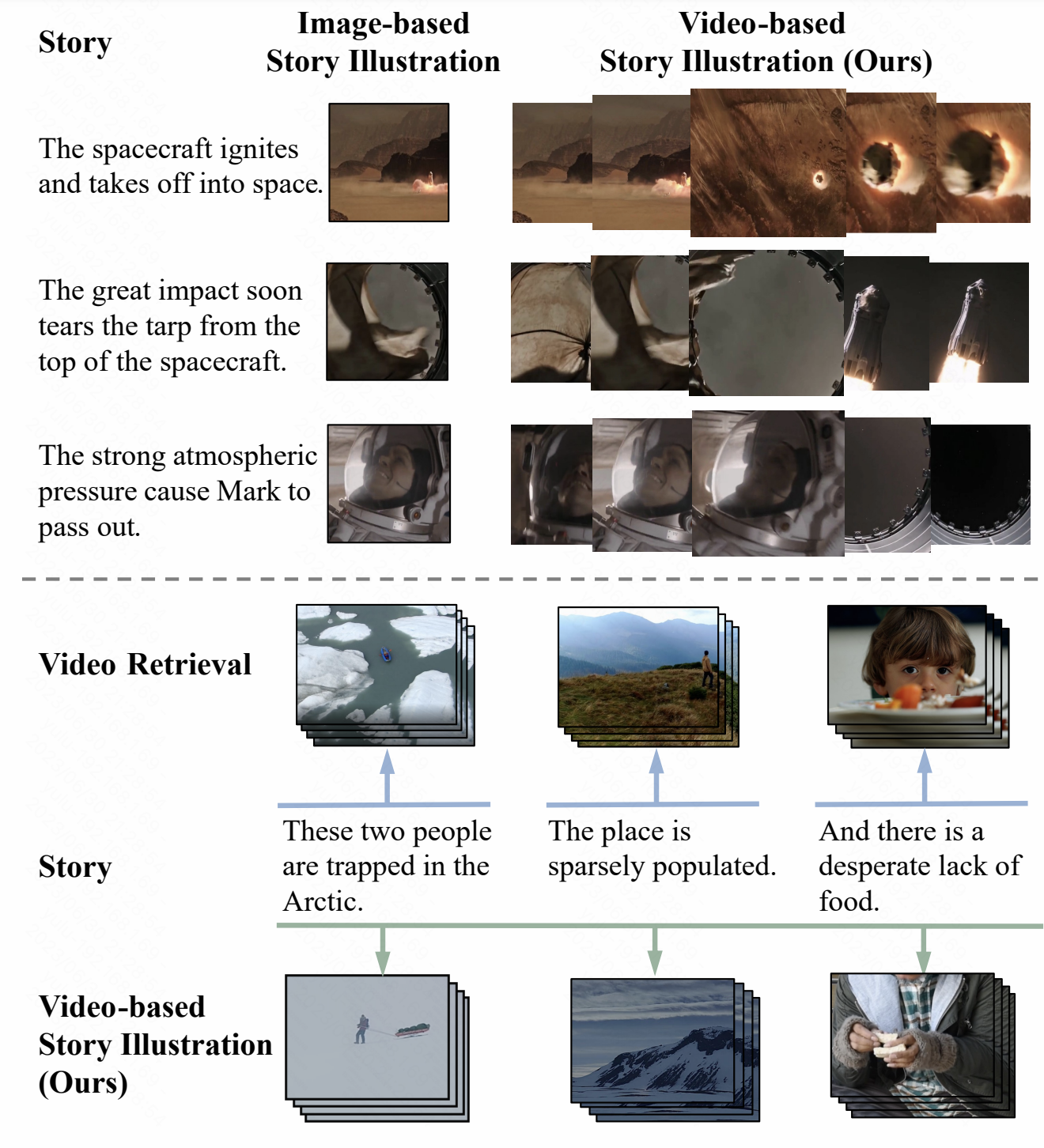

- Dec 2024: Paper on Video-Text Retrieval with unlabeled videos accepted by TIP 2024

- Sep 2024: Two papers (FreeLong and AMP) accepted by NeurIPS 2024!

- Sep 2024: Successfully defended Ph.D. thesis "Zero-shot Natural Language-Driven Video Analysis and Synthesis"

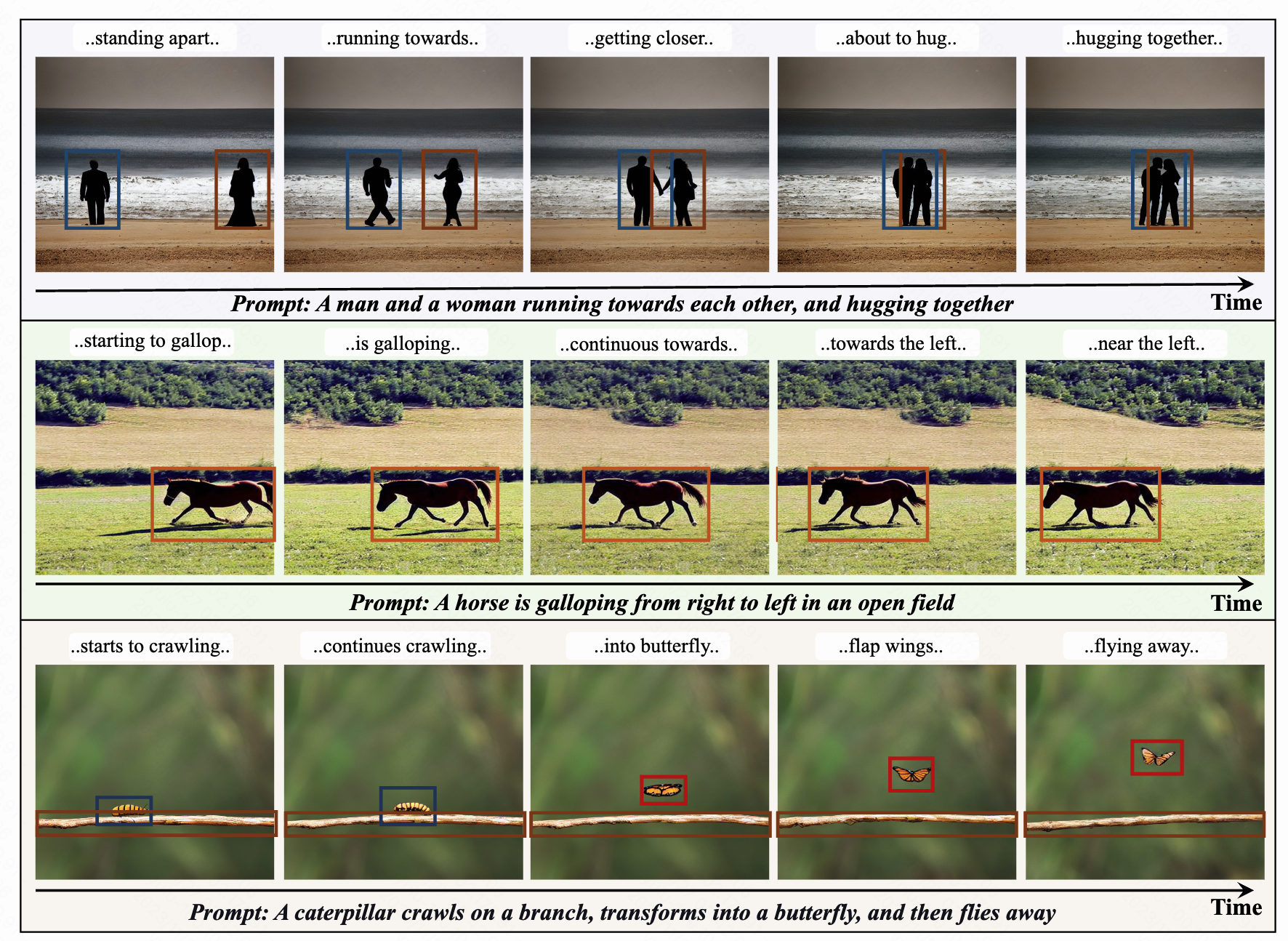

- Jan 2024: Paper on Zero-shot Video Grounding accepted by TIP 2024

Selected Publications

Professional Activities

Journal Review:

TPAMI, TIP, TMM, KBS

Conference Review:

CVPR, ICCV, ECCV, ACL, NeurIPS, ICLR, ICML